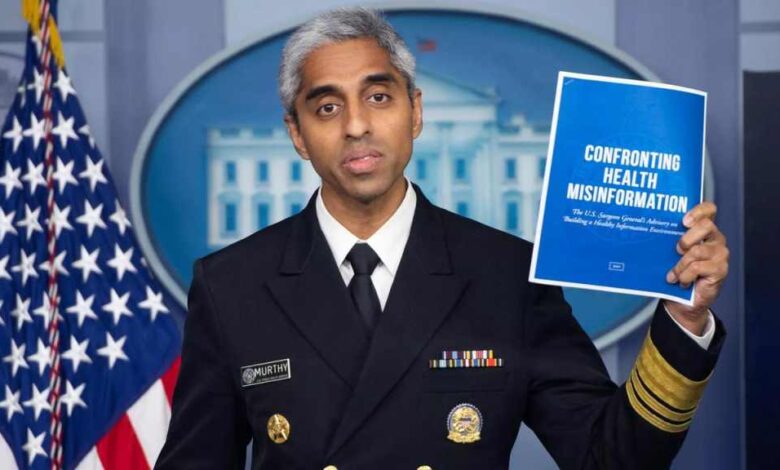

US Surgeon General Wants COVID-19 Misinformation Data From Big Tech

The US Surgeon General's request for COVID-19 misinformation data from big tech companies opens a complex and intriguing story. Made in the midst of a global pandemic, the request highlights growing concern about the spread of misinformation online and its potential impact on public health.

The Surgeon General seeks data from social media platforms to understand how misinformation spreads and what strategies can be implemented to combat it. This request underscores the critical role of tech companies in addressing the challenge of misinformation, which has become a significant obstacle in efforts to control the pandemic.

The request has sparked debate about the balance between protecting public health and safeguarding user privacy. While the need to address misinformation is undeniable, some argue that sharing user data with government agencies raises serious privacy concerns. The debate underscores the need for a nuanced approach that balances the public good with individual rights.

This request, while controversial, is a significant step towards understanding the complex interplay between misinformation, public health, and the power of big tech.

The Request and Its Context

The Surgeon General’s request for data on COVID-19 misinformation from big tech companies is a significant step in addressing the ongoing public health crisis. This request aims to understand the spread of misinformation and its impact on public health, paving the way for more effective interventions. The Surgeon General seeks specific data related to the prevalence, reach, and nature of COVID-19 misinformation circulating on social media platforms.

This includes information about the types of misinformation being shared, the demographics of users engaging with it, and the effectiveness of existing measures to combat it.

The Impact of Misinformation on Public Health

Misinformation about COVID-19 has had a devastating impact on public health, hindering efforts to control the pandemic. False information about the virus, vaccines, and treatment options has led to:

- Reduced vaccine uptake, resulting in increased cases, hospitalizations, and deaths.

- Delayed access to effective treatments, jeopardizing the health of infected individuals.

- Increased distrust in public health institutions, hindering efforts to communicate accurate information and promote public health measures.

- Heightened anxiety and fear, leading to unnecessary stress and psychological distress.

The Surgeon General’s request recognizes the urgency of addressing this problem and the need for data-driven solutions to mitigate the negative consequences of misinformation.

Historical Context of Combating Misinformation

The challenge of combating misinformation is not new. Public health emergencies throughout history have been plagued by the spread of false information, leading to negative outcomes.

- During the 1918 influenza pandemic, rumors about the cause and spread of the virus fueled public fear and mistrust in official guidance, hindering efforts to contain the pandemic.

- In the early days of the HIV/AIDS epidemic, misinformation about the virus and its transmission contributed to widespread stigma and discrimination, further hindering public health efforts.

The current pandemic underscores the importance of proactive measures to combat misinformation and ensure accurate information reaches the public.


Misinformation and Its Spread on Social Media Platforms

Social media platforms have become integral to our daily lives, offering a powerful tool for communication and information sharing. However, this very accessibility has also made them fertile ground for the spread of misinformation, particularly during times of crisis like the COVID-19 pandemic.

Understanding the mechanisms by which misinformation spreads on these platforms is crucial to developing effective strategies to combat its harmful effects.

Algorithms and User Engagement

Social media algorithms play a significant role in shaping what users see on their feeds. These algorithms prioritize content based on factors like user engagement, popularity, and relevance. While this system aims to provide users with personalized and engaging content, it can inadvertently amplify misinformation.

For instance, if a false claim about COVID-19 receives a large number of shares, likes, and comments, the algorithm may interpret this as high user engagement and promote it to a wider audience, further disseminating the misinformation.
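To make the amplification mechanism concrete, here is a minimal Python sketch of a feed that ranks posts purely by engagement. The `Post` fields, the weights, and the sample posts are hypothetical and chosen only for illustration; real ranking systems use many more signals. The point is simply that a score built from shares, likes, and comments never consults accuracy, so a heavily shared false claim can outrank accurate content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    shares: int
    likes: int
    comments: int
    accurate: bool  # ground truth; the ranker below never reads it


# Hypothetical weights chosen for illustration only.
WEIGHTS = {"shares": 3.0, "comments": 2.0, "likes": 1.0}


def engagement_score(post: Post) -> float:
    """Score a post purely from interaction counts."""
    return (
        WEIGHTS["shares"] * post.shares
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["likes"] * post.likes
    )


def rank_feed(posts: list[Post], slots: int) -> list[Post]:
    """Return the `slots` highest-scoring posts, most engaging first."""
    return sorted(posts, key=engagement_score, reverse=True)[:slots]


# Hypothetical sample posts.
posts = [
    Post("health-agency-update", shares=40, likes=500, comments=30, accurate=True),
    Post("false-cure-claim", shares=400, likes=900, comments=600, accurate=False),
    Post("local-news-report", shares=25, likes=300, comments=20, accurate=True),
]

feed = rank_feed(posts, slots=2)
print([p.post_id for p in feed])  # → ['false-cure-claim', 'health-agency-update']
```

With these made-up numbers, the false claim scores 3,300 against 680 for the health agency update, so it takes the top slot; nothing in `engagement_score` looks at the `accurate` field.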


- Filter Bubbles and Echo Chambers: Social media algorithms can create “filter bubbles” and “echo chambers,” where users are primarily exposed to content that aligns with their existing beliefs and biases. This can limit exposure to diverse perspectives and make users more susceptible to misinformation that confirms their pre-existing views.

- Trending Topics and Hashtags: Misinformation can quickly gain traction through trending topics and hashtags. When a false claim goes viral, these mechanisms can amplify it, reaching a broader audience and increasing its visibility.

- Recommendations and Suggestions: Social media platforms often suggest content based on user interactions and preferences. If a user has previously engaged with misinformation, the platform may recommend similar content, further exposing them to false claims.
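The feedback loop behind filter bubbles and engagement-based recommendations can be illustrated with a toy simulation. This sketch assumes a hypothetical catalogue in which each item carries a single topic tag, a recommender that simply favours the topics a user has clicked most, and a user who always clicks the top suggestion. It does not reflect any specific platform's recommender.

```python
from collections import Counter

# Hypothetical catalogue mapping each item to a single topic tag.
CATALOGUE = {
    "vaccine-myth-1": "vaccine-myths",
    "vaccine-myth-2": "vaccine-myths",
    "vaccine-myth-3": "vaccine-myths",
    "cdc-guidance": "official-guidance",
    "who-briefing": "official-guidance",
    "recipe-video": "cooking",
}


def recommend(history: Counter, seen: set[str], k: int = 1) -> list[str]:
    """Suggest up to k unseen items, favouring topics the user clicked most."""
    unseen = [item for item in CATALOGUE if item not in seen]
    # Stable sort: items whose topics tie keep their catalogue order.
    unseen.sort(key=lambda item: history[CATALOGUE[item]], reverse=True)
    return unseen[:k]


def simulate(first_click: str, rounds: int) -> list[str]:
    """Assume the user always clicks the top suggestion; return clicked topics."""
    history = Counter({CATALOGUE[first_click]: 1})
    seen = {first_click}
    topics = [CATALOGUE[first_click]]
    for _ in range(rounds):
        suggestions = recommend(history, seen)
        if not suggestions:
            break
        item = suggestions[0]
        seen.add(item)
        history[CATALOGUE[item]] += 1
        topics.append(CATALOGUE[item])
    return topics


print(simulate("vaccine-myth-1", rounds=2))
# → ['vaccine-myths', 'vaccine-myths', 'vaccine-myths']
```

Starting instead from `cdc-guidance`, `simulate("cdc-guidance", rounds=1)` returns `['official-guidance', 'official-guidance']`. Real recommenders are far more complex, but the toy shows how rewarding past engagement alone narrows what a user sees.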

The Role of Social Media in Amplifying COVID-19 Misinformation

Social media played a significant role in amplifying misinformation related to COVID-19. This was due to a confluence of factors, including:

- Rapid Spread of Information: The speed at which information travels on social media made it difficult to distinguish between accurate and inaccurate information. Misinformation could spread widely before fact-checkers and authorities could verify its accuracy.

- Lack of Trust in Traditional Sources: During the pandemic, there was growing distrust of traditional sources of information, such as government agencies and mainstream media. This led some individuals to seek information from alternative sources, some of which were unreliable or inaccurate.

- Emotional Appeals: Misinformation often exploits fear, anxiety, and uncertainty, emotions that were prevalent during the pandemic. This made people more susceptible to believing false claims, particularly those that offered simple solutions or explanations for complex situations.

- Examples:

  - The 5G Conspiracy: A false claim that 5G technology was responsible for the spread of COVID-19 gained widespread traction on social media. Despite being debunked by scientists and health experts, the claim spread rapidly across platforms and led to attacks on 5G infrastructure in some countries.

  - False Cures and Treatments: Misinformation about supposed cures and treatments for COVID-19, such as the use of bleach or hydroxychloroquine, was widely shared on social media. These claims were not supported by scientific evidence, and some individuals who self-treated based on them suffered dangerous health outcomes.

Strategies to Combat Misinformation

Social media platforms have implemented various strategies to combat misinformation on their platforms, including:

- Fact-Checking Partnerships: Many platforms have partnered with fact-checking organizations to verify the accuracy of content shared on their platforms. These organizations use established fact-checking methodologies to identify and debunk false claims. Fact-checked content may be flagged with a warning or label to alert users to its potential inaccuracy.

- Content Removal and Labeling: Platforms have policies in place to remove content that violates their terms of service, including misinformation that poses a direct threat to public health or safety. They may also label or flag content that is misleading or inaccurate, providing users with additional context.

- Educational Initiatives: Some platforms have launched educational initiatives to help users identify and avoid misinformation. These initiatives may include tips on how to evaluate the credibility of information sources, how to recognize common misinformation tactics, and how to tell opinion from fact.

- User Reporting Mechanisms: Platforms encourage users to report content that they believe is false or misleading. This allows the platform to investigate the content and take appropriate action, such as removing it or adding a warning label.

Effectiveness of Strategies

While social media platforms have made significant efforts to combat misinformation, the effectiveness of these strategies is still being debated. Critics argue that these efforts are often insufficient and that platforms are not doing enough to address the root causes of misinformation spread.

“The challenge of misinformation is not just about the platforms themselves, but about the broader ecosystem of information sharing and consumption.”


Some argue that platforms need to be more proactive in identifying and removing misinformation, rather than relying solely on user reports. Others advocate for greater transparency about the algorithms that drive content distribution and the impact of these algorithms on the spread of misinformation.

Impact of Misinformation on Public Health

The spread of misinformation about COVID-19 has had a profound and devastating impact on public health, leading to increased illness, death, and a decline in trust in public health institutions. The consequences of misinformation are far-reaching, affecting individuals, communities, and the global response to the pandemic.

Vaccine Hesitancy and Delayed Medical Care

Misinformation about COVID-19 vaccines has fueled vaccine hesitancy, leading to a significant number of people refusing or delaying vaccination. This hesitancy is driven by a variety of factors, including false claims about vaccine safety, efficacy, and the potential for adverse effects.

The consequences of vaccine hesitancy are severe, as unvaccinated individuals are at a much higher risk of contracting COVID-19, experiencing severe illness, hospitalization, and even death. Misinformation has also contributed to delayed medical care, with some individuals avoiding seeking medical attention due to fear of contracting COVID-19 or mistrust in healthcare professionals.

This delay in seeking care can have serious consequences, as early detection and treatment are crucial for managing COVID-19 and other health conditions.

Impact on Public Trust in Health Authorities and Scientific Institutions

Misinformation undermines public trust in health authorities and scientific institutions. The spread of false and misleading information about COVID-19 has eroded public confidence in the expertise and credibility of public health officials, scientists, and medical professionals. This erosion of trust can lead to a reluctance to follow public health guidelines, such as mask-wearing and social distancing, which are essential for controlling the spread of the virus.

Ethical Implications of Misinformation

Misinformation poses significant ethical challenges, particularly in relation to vulnerable populations. The spread of misinformation can exacerbate existing health disparities, as marginalized communities are often disproportionately affected by the pandemic. It is crucial to address the ethical implications of misinformation and ensure that everyone has access to accurate and reliable information about COVID-19.

Challenges and Considerations for Big Tech Companies

Big tech companies face a complex and multifaceted challenge in addressing misinformation on their platforms. Balancing the need to protect users’ privacy with the responsibility to curb the spread of harmful content presents a delicate tightrope walk. This section explores the key challenges and considerations big tech companies face in this ongoing battle.

Privacy Concerns and Content Moderation

The tension between privacy and content moderation is a central challenge for big tech companies. While the need to combat misinformation is clear, it requires access to user data and content, raising concerns about privacy violations.

- Data Collection and Analysis: To effectively identify and remove misinformation, companies need to analyze user data, including posts, comments, and interactions. However, this data collection can raise privacy concerns, especially if it involves sensitive personal information.

- Algorithmic Bias: Content moderation algorithms, often used to flag misinformation, can be susceptible to biases, potentially leading to the suppression of legitimate content or the amplification of misinformation. This raises concerns about the potential for censorship and the need for transparency in algorithmic decision-making.

- Transparency and User Trust: Big tech companies face the challenge of maintaining user trust while also being transparent about their content moderation practices. A lack of transparency can lead to accusations of censorship or bias, further eroding user trust.

Legal and Ethical Implications of Data Sharing

The potential legal and ethical implications of sharing user data with government agencies are significant. While collaboration is crucial in combating misinformation, it raises concerns about data security, potential misuse, and the erosion of user privacy.

- Data Security and Misuse: Sharing user data with government agencies raises concerns about data security breaches and potential misuse of the information. This underscores the importance of robust safeguards and transparency in data sharing practices.

- Erosion of User Trust: Sharing user data with government agencies can erode user trust in big tech companies, particularly if users perceive that their privacy is being compromised. This can lead to a decline in user engagement and platform usage.

- Legal Liability: Big tech companies may face legal liability if they share user data with government agencies without proper consent or legal justification. This highlights the importance of establishing clear legal frameworks and guidelines for data sharing.

Different Approaches to Misinformation Control

Big tech companies have adopted various approaches to combat misinformation, each with its strengths and weaknesses.

- Fact-Checking and Labeling: This approach involves partnering with fact-checking organizations to verify the accuracy of information and label potentially false content. While this can be effective in highlighting misinformation, it can be resource-intensive and may not address the underlying drivers of misinformation spread.

- Algorithm-Based Detection: This approach uses algorithms to identify and flag potentially false content based on patterns and signals. While efficient, it can be susceptible to bias and may not be effective in detecting nuanced or evolving forms of misinformation.

- Community Moderation: This approach relies on users to flag and report potentially false content. While it empowers users, it can be susceptible to abuse and may not be effective in addressing large-scale misinformation campaigns.

- Education and Awareness: This approach focuses on educating users about misinformation, critical thinking skills, and how to identify and verify information. While essential for long-term solutions, it can be a slow process and may not immediately curb the spread of misinformation.
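The weaknesses of algorithm-based detection show up even in a deliberately naive example. The sketch below assumes a hypothetical blocklist of regular expressions for previously debunked claims; real detection systems are considerably more sophisticated, so treat this as an illustration of the failure modes rather than of how any platform actually works.

```python
import re

# Hypothetical blocklist: regular expressions for previously debunked claims.
KNOWN_FALSE_PATTERNS = [
    re.compile(r"\b5g\b.*\b(causes?|spreads?)\b.*\bcovid", re.IGNORECASE),
    re.compile(r"\bdrink(ing)?\s+bleach\b", re.IGNORECASE),
]


def flag(post: str) -> bool:
    """Return True if the post matches any known-false pattern."""
    return any(pattern.search(post) for pattern in KNOWN_FALSE_PATTERNS)


# Hypothetical sample posts.
posts = {
    "original claim": "5G towers spread COVID to everyone nearby",
    "reworded claim": "The new phone masts are what is really making people sick",
    "fact-check": "No, 5G does not cause COVID-19, experts say",
}

for label, text in posts.items():
    print(f"{label}: flagged={flag(text)}")
# original claim: flagged=True
# reworded claim: flagged=False
# fact-check: flagged=True
```

The reworded claim slips through because it avoids the exact phrasing, while the fact-check is flagged because it quotes the claim in order to refute it: an evolving claim is missed and a piece of legitimate content is caught by mistake.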

Conclusion

The US Surgeon General’s request for COVID-19 misinformation data from big tech companies is a bold move that signals a growing awareness of the dangers of misinformation in the digital age. It highlights the need for collaboration between government agencies, tech companies, and public health organizations to address this critical issue.

While the request raises important questions about privacy and data sharing, it also represents a crucial step towards protecting public health in the face of a global pandemic. The ongoing debate surrounding this request will likely shape the future of online content moderation and the role of technology in shaping public discourse.